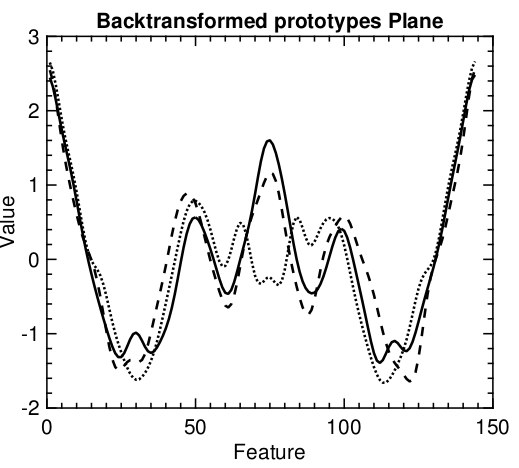

Prototypes efficiently learnt in Fourier space, back-transformed to the time domain.

Abstract

In this contribution, we consider the classification of time series and similar functional data that can be represented in complex Fourier coefficient space. We apply versions of Learning Vector Quantization (LVQ) that are suitable for complex-valued data, based on the so-called Wirtinger calculus. The Wirtinger calculus makes it possible to formulate gradient-based update rules in the framework of cost-function-based Generalized Matrix Relevance LVQ (GMLVQ). Alternatively, we apply conventional GMLVQ to two real-valued representations: a feature vector formed by concatenating the real and imaginary parts of the Fourier coefficients, and the original time-domain representation.
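The following Python sketch illustrates, under stated assumptions, the ingredients named above. It computes truncated complex Fourier coefficients with `numpy.fft.rfft` (the number of retained coefficients `n_coeffs` is an assumption), forms the concatenated real/imaginary feature vector, and evaluates a GMLVQ-type distance extended to complex vectors as the Hermitian form $(x - w)^H \Omega^H \Omega (x - w)$. It also gives the Wirtinger derivative of that distance with respect to the conjugate prototype. All helper names and the LVQ1-style `attract` step are hypothetical. The full cost-function-based GMLVQ update rules of the paper are not reproduced here.

```python
import numpy as np


def fourier_features(x: np.ndarray, n_coeffs: int) -> np.ndarray:
    """First n_coeffs complex DFT coefficients of a real-valued time series."""
    return np.fft.rfft(x)[:n_coeffs]


def concat_real_imag(c: np.ndarray) -> np.ndarray:
    """Real-valued feature vector [Re(c), Im(c)] for conventional GMLVQ."""
    return np.concatenate([c.real, c.imag])


def gmlvq_distance(x: np.ndarray, w: np.ndarray, omega: np.ndarray) -> float:
    """Squared distance (x - w)^H Omega^H Omega (x - w).

    For real inputs this is the usual GMLVQ form (x - w)^T Lambda (x - w)
    with Lambda = Omega^T Omega. For complex inputs the Hermitian form is
    real and non-negative.
    """
    diff = omega @ (x - w)
    # np.vdot conjugates its first argument, so this is ||diff||^2
    return float(np.vdot(diff, diff).real)


def wirtinger_grad_wrt_conj_w(x, w, omega):
    """Wirtinger derivative of gmlvq_distance with respect to conj(w).

    Treating w and conj(w) as independent variables, the derivative of
    (x - w)^H Lambda (x - w), with Hermitian Lambda = Omega^H Omega,
    is -Lambda (x - w).
    """
    lam = omega.conj().T @ omega
    return -lam @ (x - w)


def attract(w, x, omega, lr=0.1):
    """Hypothetical LVQ1-style step: move prototype w towards sample x along
    the negative Wirtinger gradient (not the full GMLVQ cost-function update)."""
    return w - lr * wirtinger_grad_wrt_conj_w(x, w, omega)


if __name__ == "__main__":
    t = np.linspace(0.0, 1.0, 64, endpoint=False)
    series = np.sin(2 * np.pi * 3 * t)
    c = fourier_features(series, n_coeffs=8)   # 8 complex coefficients
    v = concat_real_imag(c)                    # 16 real features
    omega = np.eye(8)                          # assumed initial Omega
    w = np.zeros(8, dtype=complex)             # assumed initial prototype
    before = gmlvq_distance(c, w, omega)
    w = attract(w, c, omega, lr=0.1)
    after = gmlvq_distance(c, w, omega)
    print(v.shape, before > after)
```

With `omega` set to the identity, the `attract` step replaces `x - w` by `0.9 * (x - w)`, so the distance shrinks by a factor of 0.81. The complex and the concatenated representations carry the same information. They differ in how a relevance matrix can couple real and imaginary parts.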

Publication

2017 12th International Workshop on Self-Organizing Maps and Learning Vector Quantization, Clustering and Data Visualization (WSOM)