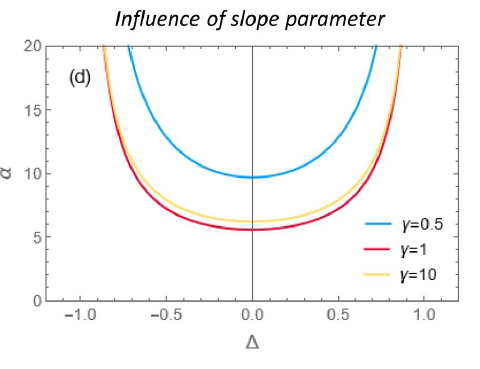

The GELU-SCM shows a continuous phase transition for every value of the slope parameter, but the location of the transition (the critical dataset size) depends on the slope parameter.


Abstract

The GELU activation function is closely related to the popular swish and ReLU activations. Recent work has shown that soft committee machines (SCMs) with ReLU activation display a continuous phase transition in their learning curves, whereas SCMs with the sigmoidal erf activation show a discontinuous one. We show that the GELU-SCM also undergoes a continuous phase transition towards specialized hidden units once the number of training examples exceeds a critical value. This rules out the hypothesis that the convexity of the ReLU causes the continuous transition: the GELU is non-convex and shows the same type of transition.
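The non-convexity argument can be checked directly. Using the standard definition GELU(x) = x·Φ(x), with Φ the standard normal CDF, the second derivative is φ(x)(2 − x²), which is negative for |x| > √2, whereas the ReLU is convex everywhere. The sketch below is illustrative only: it uses the standard GELU without the slope parameter mentioned in the figure caption, and the helper names (`gelu_second_derivative`, `midpoint_convex`, `scm_output`) and the SCM normalization (hidden fields w_k·ξ/√N, all hidden-to-output weights fixed to 1) are assumptions, not the paper's code.

```python
import math


def gelu(x: float) -> float:
    """Standard GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def relu(x: float) -> float:
    return max(0.0, x)


def gelu_second_derivative(x: float) -> float:
    """d^2/dx^2 [x * Phi(x)] = phi(x) * (2 - x^2); negative for |x| > sqrt(2)."""
    phi = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return phi * (2.0 - x * x)


def midpoint_convex(f, a: float, b: float) -> bool:
    """True if f satisfies the convexity inequality at the midpoint of [a, b]."""
    return f(0.5 * (a + b)) <= 0.5 * (f(a) + f(b))


def scm_output(weights, xi, g=gelu) -> float:
    """Hypothetical soft committee machine: sum over K hidden units of
    g(w_k . xi / sqrt(N)), with hidden-to-output weights fixed to 1
    (this normalization is an assumption)."""
    n = len(xi)
    total = 0.0
    for w in weights:
        h = sum(wi * xj for wi, xj in zip(w, xi)) / math.sqrt(n)
        total += g(h)
    return total


if __name__ == "__main__":
    # Sign of the GELU curvature at a few points: negative in the outer tails.
    for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(f"x={x:+.1f}  GELU''(x)={gelu_second_derivative(x):+.5f}")
    # Midpoint test on [-4, -2]: fails for GELU, holds for ReLU.
    print("GELU convex on [-4, -2]:", midpoint_convex(gelu, -4.0, -2.0))
    print("ReLU convex on [-4, -2]:", midpoint_convex(relu, -4.0, -2.0))
    # Output of a toy K=2 committee on an N=2 input.
    print("SCM output:", scm_output([[1.0, 0.5], [-0.5, 1.0]], [1.0, 2.0]))
```

A single failed midpoint inequality is enough to show that GELU is not convex. That is the property the abstract relies on to rule out convexity as the cause of the continuous transition.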

Type

Publication

Off-line Learning Analysis for Soft Committee Machines with GELU Activation