Layered Neural Networks with GELU Activation, a Statistical Mechanics Analysis

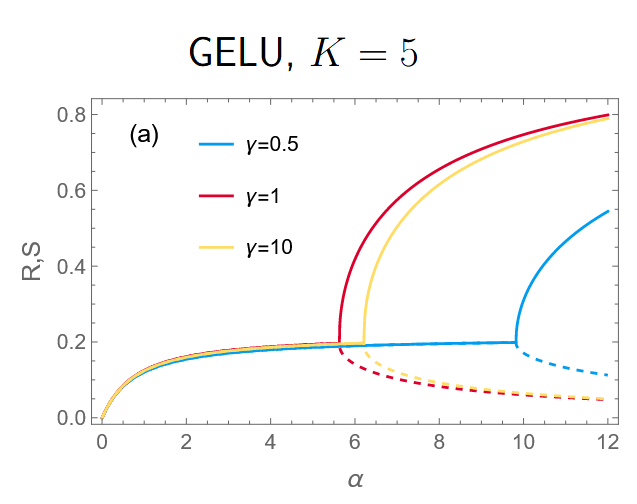

Order parameters vs. relative data set size for a network with 5 hidden units and different GELU slope parameters.

Abstract

Understanding the influence of activation functions on the learning behaviour of neural networks is of great practical interest. The GELU, being similar to swish and ReLU, is analysed for soft committee machines in the statistical physics framework of off-line learning. We find phase transitions with respect to the relative training set size, which are always continuous. This result rules out the hypothesis that convexity is necessary for continuous phase transitions. Moreover, we show that even a small contribution of a sigmoidal function like erf in combination with GELU leads to a discontinuous transition.
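As a rough illustration of the objects named in the abstract, the sketch below writes out GELU next to swish and ReLU, a GELU/erf mixture, and the output of a soft committee machine. It is not taken from the paper: the `slope` parametrisation, the convex-combination form of `mixed` with weight `eps`, the unit hidden-to-output weights, and the 1/sqrt(N) scaling of the local fields are all assumptions chosen for illustration.

```python
import math

def gelu(x, slope=1.0):
    # Standard GELU is x * Phi(x), with Phi the standard normal CDF.
    # The `slope` factor inside Phi is an assumed parametrisation.
    return 0.5 * x * (1.0 + math.erf(slope * x / math.sqrt(2.0)))

def swish(x, beta=1.0):
    # Swish: x * sigmoid(beta * x).
    return x / (1.0 + math.exp(-beta * x))

def relu(x):
    return max(0.0, x)

def mixed(x, eps=0.1):
    # Hypothetical mixture: (1 - eps) * GELU plus eps * sigmoidal erf term.
    return (1.0 - eps) * gelu(x) + eps * math.erf(x / math.sqrt(2.0))

def soft_committee(weights, x, g=gelu):
    # Soft committee machine: sum of hidden-unit activations with
    # hidden-to-output weights fixed to 1 (assumed convention).
    n = len(x)
    total = 0.0
    for w in weights:
        # Local field of one hidden unit, scaled by 1/sqrt(N) (assumed).
        field = sum(w_i * x_i for w_i, x_i in zip(w, x)) / math.sqrt(n)
        total += g(field)
    return total
```

With this parametrisation, a large `slope` makes `gelu` approach `relu`, which is one way to see why the two are described as similar; setting `eps=0.0` in `mixed` recovers plain GELU.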

Publication

Off-line Learning Analysis for Soft Committee Machines with GELU Activation